Building a search engine from scratch

Halvor Bø27.03.2022

So why is Google so successful? Google helps people find what they’re looking for better than any other search engine.

Finding what people are looking for is the bread and butter of a search engine. The infamous Youtube “rabbit hole” initiates with a single search in the YouTube search bar or searching for movies on Netflix on a Saturday night. These are examples of powerful search engines that direct users to their deepest desires, in the form of content.

In this post, we will explain the core components of search engines and understand how they work together by building a basic search engine from scratch.

Target audience

- People trying to understand search engines.

- People interested in databases -> a lot of the content in this article is also relevant for databases in general.

- People trying to learn more about DSLs -> some of the content here shows how to build a simple DSL compiler.

- People wanting to learn more about algorithms -> these algorithms show complexity.

What will we build?

- Given the length of the article

- If you’re curious of what we can learn.

- All these queries work in Google https://ahrefs.com/blog/google-advanced-search-operators/

- Follows the basic structure of real search engines like ES. The process is more complex in reality, but this prototype should help you understand.

How to use this article?

- This article is quite long, and you can jump around.

- Below is a table of contents.

Chapter 1: What is a search engine?

Before jumping into the code, we’ll explain the process behind search engines. Let’s use the example of a search engine for the web - like Bing.

Web search engines are a combination of different components, including state-of-the-art search algorithms and ranking techniques. Millions of servers scrape websites, store and aggregate content, and perform complex matrix calculations to return relevant search results.

Search engine algorithms aim to deduce a relevant collection of high-quality search results that accurately reflect the user’s search intent, usually in milliseconds.

However, the search starts way before the user enters the search query in the browser. Let’s develop a better understanding of the steps a search engine follows to return high-quality results.

1. Collecting the content we want to search

Data is snowballing, with worldwide data volumes expected to reach 181 zettabytes by 2025. Back in the day, the majority of data was structured, which was easily processed by search engines. Today, industry experts believe that 80%–90% of data is unstructured, which is difficult to understand for search engines.

Search engines are continuously evolving to adapt to the changing data trends. They look through the entire data on the web (or most) and decide whether or not it is searchable. They do so via web crawling, a search engine process that uses web crawlers or web spiders (bots) to understand the content on a web page and make it retrievable.

Because the web is massive, crawlers have to start somewhere and be guided properly. Web crawlers check the website’s robot.txt file, which contains policies, rules, and sitemaps that allow web crawlers to access and discover all linked web pages. Web crawlers download all discovered web pages to the search engine’s servers for further processing. Check out this robots.txt file from Cloudflare.

Search engines servers use different databases to store the crawled web content. Most often, these are distributed non-relational databases that offer speed, reliability, and scalability to streamline the search process. Besides indexing, search engine databases support logs, metrics, application events, and full-text search (discussed below).

2. Organizing the content to be easily searchable

Now that we have PBs on PBs of unstructured content, how do we make it searchable? The answer is building an index.Do you know how search engines immediately respond to your queries? Search engines like Google crawl billions of web pages and bring them back to the servers, where they are organized in the form of indexes.

What is an index?

An index is like a digital catalog or dictionary of terms that takes note of a web page’s main keywords, content relevance, overall website freshness, content structuring, and various other parameters to make the web page available for searching. It aims at optimizing performance and speed in finding relevant documents for the search query.

Moreover, indexing saves plenty of time and computing power. With indexing, search engines don’t need to search every document. For example, a query with 15,000 searchable documents can return results in milliseconds with indexing. On the contrary, a sequential scan of every word in 15,000 documents will take hours.

Textbook indexes are a great analogy for search engine indexing. A textbook index provides a roadmap to the book, which lists names, places, and resources in alphabetical order, and assigns them page numbers. The following example demonstrates indexing of a cookbook:

Corn Soup………………………page 10

Chicken Curry…………………page 39

Mutton Roast….……………..page 101

The index of a cookbook is a mapping between phrases or words in the book. Indexing in search engines is also similar. Search engines create a mapping between terms and occurrences of terms. Do you like to read the entire cookbook to search the Motton Roast receipt? Certainly not, as it is cumbersome and time-wasting. Likewise, it’s inefficient for search engines to scan a plethora of documents every time they are asked to identify the occurrences of a term.

Once indexed, the content is available for search via a web browser. However, search engines like Google have hundreds of billions of indexed web pages containing almost all words available on the internet to enable full-text search.

How can we use indices to speed up text searches?

We now understand the concept of an index. When searching text a normal index is not necessarily that useful. We use something called an inverted index. What that allows us to do is to estimate how useful a term is.Full-text search is a comprehensive search technique that allows a search engine to match the search query against every word in a document or stored text. Web search engines commonly use it to display the most relevant results.

An example of an algorithm that works with inverted indexes and not with normal indexes is TF-IDF (or Term Frequency-Inverse Document Frequency). (Elasticsearch is a notable example of full-text search. It uses an algorithm called TF-IDF (Term Frequency-Inverse Document Frequency) is an algorithm thatwhich scores a document based on the occurrence of a term that appears in the document. Greater TF-IDF scores represent a greater similarity between the query and the document, weight by how likely they were to be similar. higher scores for a document with more tendency to appear in the top search results.

Due to the massive size of the global search index, searching becomes very slow. So, search engines (including Google) use inverted indexes, a data structure that maintains or maps (index) web content including documents, text, images, and videos, allowing quick retrieval of information from the search engine server.

Below is a simple demonstration of the inverted index data structure. Each keyword or token is associated with a row of documents (web pages in our case) in which that token was found. Instead of URLs, the inverted index usually contains document ids for each token. However, we are using web page URLs to develop a better understanding of inverted indexes.

Search engines do a lot more than full-text search, like web page ranking. The most notable example of web page ranking is Google’s PageRank.

What else can be added to an indexother techniques are used for search engines?

There is more to a website than text. We can for example look at how different webpages interact.

PageRank was Google’s first web page ranking system that counted the number and quality of external web links referencing a web page to estimate its importance. The underlying assumption was that important websites are likely to receive more links from external websites.

However, Google’s web page ranking system has undergone many changes over the years. Today, in addition to the web page ranking techniques, Google uses advanced AI algorithms like BERT to understand search queries better and improve search results.

These techniques can easily be added on to text search, but they are outside the scope of what we’re covering in this article.

3. Searching PBs in milliseconds

Web crawling and indexing are the two primary web search engine components. Once a search engine index is built, it is updated frequently to capture new web content by crawling it and adding new tokens to the search engine index. Different search engines have varying time ranges to update indexes with new web content. Given the ever-increasing size of the internet, it may take from a few days to a few weeks. Figure 1 demonstrates the cycle of various search engine processes.

However, Google’s web page ranking system has undergone many changes over the years. Today, in addition to the web page ranking techniques, Google uses advanced AI algorithms like BERT to understand search queries better and improve search results.

After the web content is indexed, it is available to be searched by an internet user. An internet user can enter a search query using web browsers or web clients like Chrome, Firefox, or Safari, and the underlying search engine would return web pages that are most relevant to the searched term.

Summary

So far, we have covered the fundamentals of search engines. From here, the process will get more complex, and we will use some technical jargon to aid our explanation of building a search engine from scratch. Strap on for the ride.

Chapter 2: How can we build a search engine prototype?

Building a global search engine like Google or Bing is quite challenging and takes years of development effort from thousands of engineers. Before we start writing the code for building our search engine from scratch, let’s simplify the process to fit the entire process within this blog post.

Assumptions

For building a simpler search engine, we have made the following assumptions.

- Our search engine only supports a limited set of query types described in the table below.

- Our search engine only supports simple querying rather than incremental querying, which means that all data will be indexed before a user can start searching content.

- We will use the simplest data structure to build the search engine from scratch.

- We ignore advanced search engine topics like query planning, ASTs, and compression.

Jargon Dictionary

Let’s summarize the search engine technical jargon in the table below:

Term

Description

Document

Set of words (usually a web page). See the sample document from Shia LeBeouf’s outrageous motivational speech below. We’ll use this speech for building our search engine.

Term

Most commonly, it’s a single word in the document. For example, “life” or “search.”

Token

The frequency of the term in the document. For example, “work” is a token that appears three times on a certain web page.

Incremental indexing

In full-text search, search engines support adding documents (web pages) to the index and allow querying while indexing. This is referred to as incremental querying.

Normal index

Used by the database where it maps the search query exactly against the search engine index.

Full-text search

A method that compares every term in a search query with every word in a document.

Inverted index

A specialized data structure containing all the indexes and available tokens to enable search.

Sample Document for Our Search Engine

We have used three speeches for building this search engine. The following text (Shia LeBeouf’s speech) demonstrates a single document:

Do it. Just do it. Don’t let your dreams be dreams. Yesterday, you said tomorrow. So just do it. Make your dreams come true. Just do it. Some people dream of success, while you’re gonna wake up and work hard at it. Nothing is impossible. You should get to the point where anyone else would quit, and you’re not gonna stop there. No, what are you waiting for? Do it! Just do it! Yes, you can. Just do it. If you’re tired of starting over, stop giving up.

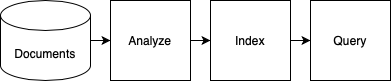

Algorithm for searching documents

Our search engine has a simple pipeline. We will not crawl the content as we already have our three sample documents. We’ll split the document into tokens and insert them into inverted-index, which will allow users to query the sample documents. The search engine pipeline involves the following steps:

- Document in

- Indexing

- Analyze (Preprocessing)

- Build index

- Query

- Searching algorithm

- Evaluation algorithm

- Score calculation

- Global state update

- Final results

We have our sample documents. Now, we need to structure the documents, store them, and build an index to make them easier to search.

Normal indexing is efficient for searching the exact phrase in a single document and returns the exact match or range of search results. However, to build a realistic search engine, we need an algorithm to perform full-text search on billions of documents (three, in our case).

The table below demonstrates the capabilities of full-text search. It uses various search operators to identify the search query intent.

To build a simple search engine, we do not need to support all of these search queries. However, they’ll make the core pipeline look more like a real search engine. Let’s start the process.

Implementing and understanding the algorithm for building an index

1. Collecting the documents

The first step in building a search engine is to collect documents (speeches in this context). A document is a unit of searching in the full-text search technique. You can search for particular words, sequences of words, or sets of words. A full-text query matches a complete word instead of just a part of a string. For instance, a full-text search for “ledge” will not match a piece of text that comprises the word “acknowledge” but will match a substring “ledge” only.

The search engine code in this article is written using Python programming language. The following code snippet can read speeches or documents from files on disk.

documents = read_speaches_from_disk()

2. Analyzing the text

The second step in the pipeline is analyzing the document and tokenizing it. Tokenization splits text into tokens both during indexing and querying. Tokens are, in fact, the basic units for identifying matches between search queries and records.

Full-text search matching is usually case-sensitive, so the text that is being searched has to match the case of the search term. The word “ledge” will not match “Ledge,” “leDge,” “LEdge,” and so on.

For this, we’ll convert the document to lowercase and extract all the words (terms) using regular expression. The extracted tokens will be used to create the inverted index.

The following code snippet tokenizes a document based on the defined regular expression used to convert the document to lower case. Then prints the tokens by looping on the tokenized documents. Here the tokens for three documents are displayed.

def tokenize(document: str) -> List[str]:

# convert the document to lowercase

lowercase_document = document.lower()

# extract all the words using a regex

words = re.findall(r"\w+", lowercase_document)

return list(words)

tokenized_documents = [tokenize(document) for document in documents]

for tokenized_document in tokenized_documents:

print(tokenized_document[:3], "...", tokenized_document[-3:])3. Building the indices

Once all documents are tokenized, we can create an inverted index from them by matching each token with the tokenized documents. To make the exact matching more efficient, we store the positions of terms instead of the number of occurrences in documents.

Since we are using Python to build our search engine, Python lists are dynamic and can automatically keep track of the length (number of occurrences). So, we don’t need a separate data structure to record the count of occurrences for each term.

The following code snippet iterates over the tokenized documents and matches each token to record its document id and token index to build the inverted index.

for document_id, tokenized_document in enumerate(tokenized_documents):

for token_index, token in enumerate(tokenized_document):

token_position = (document_id, token_index)

if token in inverted_index:

inverted_index[token].append(token_position)

else:

inverted_index[token] = [token_position]

print("just ->", inverted_index["the"])

print("do ->", inverted_index["the"])

Output:

just -> [(1, 0), (1, 5), (1, 20), (1, 28), (1, 76), (1, 82)]

do -> [(0, 94), (0, 399), (0, 455), (0, 493), (1, 1), (1, 3), (1, 6), (1, 21), (1, 29), (1, 74), (1, 77), (1, 83), (2, 15), (2, 33), (2, 44)]3. The SQL of text search

The final step in building the search engine pipeline is preparing logic to query the inverted index. Our search engine supports querying the inverted index with exact string matches. Additionally, it supports the following query operators.

Operator

Description

AND

When there is an AND operator between two terms, the search engine returns the documents containing a match for both terms.

OR

The search engine considers OR operator if there is no operator between two terms, which implies returning search results for either term in the search query.

INCLUDE

If a term contains “+” before it, the search engine excludes all the documents that miss that term.

EXCLUDE

If a term contains “-” before it, the search engine excludes all the documents that contain that term.

Scoring a query

The querying phase has two main algorithms, i.e., the searching algorithm and the evaluation algorithm. The searching algorithm uses the operators mentioned in the table above to filter the documents and keep track of the relevance scores for each document. The evaluation algorithm computes the document score and updates the global document relevance state that is initialized in the searching algorithm. Before explaining the two algorithms, let’s discuss the document relevance scoring mechanism in detail.

Scoring determines the relevance of retrieved documents based on the query of a user, term frequency, and several other critical parameters. For example, Elasticsearch enriches the Lucene scoring technique with Boolean Model to identify matching documents.

In addition, a practical scoring function is utilized to calculate the relevance, which employs an algorithm named TF/IDF (Term Frequency/Inverse Document Frequency) and a Vector Space Model. Lastly, Lucene combines them into a single reliable package that gathers matching documents and scores them based on search results.

Sometimes, a result cannot be relevant even when the exact term is matched. For example, if you search an “apple,” a search doesn’t ensure whether it is the apple company or an apple fruit. Under such circumstances, search engines involve filtering matches by index, by document type, or by applying some personalized or contextual logic.

The Boolean Model involves conditions, including AND, OR, and NOT. These conditions help in matching the documents against the search query. Look at the following example of a search query based on the Boolean Model:

Everyone AND loves AND cricket AND (Soccer OR Hockey)

This query will match only the documents that comprise all the terms given in the query, including Everyone, loves, cricket, and either Soccer or Hockey.

Now that we have explained how scoring works, let’s explain the searching and evaluation algorithms to understand how the search engine executes search queries to return relevant results.

How do we parse the query?

Searching algorithm starts by extracting a list of terms and operators by splitting the search query string. It parses the query from left to right to identify INCLUDED and EXCLUDED documents. It also keeps track of the document scores, which are later updated by the evaluation algorithm. Lastly, it sorts and returns the document IDs based on their relevance score and INCLUDE/EXCLUDE filters.

The following code snippet parses the search query to separate query terms and operators and initializes document scores.

query_expressions = re.findall(r"[\"\-\+]|[\w]+", query)

# Output for query: do AND "you can" -tomorrow

['do', 'AND', '"', 'you', 'can', '"', '-', 'tomorrow']This is our AST, we don’t need anything more advanced. The reason why we don’t support nexting.

Scoring the documents

The code snippet below demonstrates the evaluation algorithm used in this search engine. The evaluation algorithm has an operator mode and a pointer to iterate the search query. The mode changes its value when the pointer iterates through an operator (AND, OR, INCLUDE, EXCLUDE) in the search query. Based on the current term, the algorithm updates the global relevancy scores for each document, as shown in the code snippet below.

— CODE language-python —document_scores: DocumentScores = {}

excluded_document_ids: Set[DocumentId] = set()

included_document_ids: Set[DocumentId] = set()

mode = Mode.OR

pointer = 0

while pointer < len(query_expressions):

query_expression = query_expressions[pointer]

if query_expression in MODES:

# set mode

else:

if query_expression == QUOTE:

# handle EXACT CASE

new_document_scores = ...

# set pointer to the location of the closing quote

else:

# handle TERM CASE

new_document_scores = ...

# update the global variables variables

# using new_document_scores and the mode

# AND, OR, INCLUDE, EXCLUDE

pointer += 1

# compute the final scores

# filter out based on exclusion and inclusionThe final document scores are computed based on the term cases defined below.

Term Case

Get all token positions from the inverted index to calculate the token score. It uses the term_score() method to calculate the frequency of term occurring in the document.

def term_scores(token_positions: TokenPositions) -> DocumentScores:

document_term_scores: DocumentScores = {}

for document_id, _ in token_positions:

if document_id in document_term_scores:

document_term_scores[document_id] += 1

else:

document_term_scores[document_id] = 1

return document_term_scores

token_positions = inverted_index[term]

document_term_scores = term_scores(token_positions)Output:

document_term_scores = {1: 10, 5: 3, 5: 3}Exact Case

Matching the exact search term is a bit challenging. An exact match can have multiple words. It requires finding all token locations that match the exact term. The code snippet below shares a simplified version of exact case matching.

document_scores: DocumentScores = {}

excluded_document_ids: Set[DocumentId] = set()

included_document_ids: Set[DocumentId] = set()

token_positions = inverted_index[term]

document_term_scores = term_scores(token_positions)

pointer += 1

matches = [(document_id, token_position + 1)

for document_id, token_position in inverted_index.get(query_expressions[pointer], [])]

pointer += 1

while query_expressions[pointer] != QUOTE:

if not matches:

break

term = query_expressions[pointer]

matches = next_token_position_matches(inverted_index[term], matches)

pointer += 1

document_term_scores = term_scores(token_positions)Evaluating the query

In the searching algorithm, we initialized the global score state and set the operator mode to OR. After calculating the document term score, we can update the global score state to calculate document relevancy. We have shared the relevancy score code for each search operator in the code snippets below.

OR Operator

Add the new document relevancy scores to the current score.

def merge_or(current: DocumentScores, new: DocumentScores):

for document_id, score in new.items():

if document_id in current:

current[document_id] += score

else:

current[document_id] = score

AND Operator

For AND operator, the evaluation algorithm computes scores by simply adding the terms that already exist in the current scores table and removing those documents that didn’t contain the term. The code snippet below uses Python set operations to apply the AND filter on the documents.

def merge_and(current: DocumentScores, new: DocumentScores):

# Find the keys that are not in both.

filtered_out = set(current.keys()) ^ set(new.keys())

for document_id in list(current.keys()):

if document_id in filtered_out and document_id in current:

del current[document_id]

for document_id, score in list(new.items()):

if document_id not in filtered_out:

current[document_id] += scoreINCLUDE/EXCLUDE Filters

Simply add the document term score in the included and excluded document lists.

included_document_ids.update(document_term_scores.keys())

excluded_document_ids.update(document_term_scores.keys())Returning the final score

We can verify the results by retrieving the list of documents that matches the user’s search query. The code snippet below uses the EXCLUDE filter to demonstrate results.

return list(

sorted(

(

(document_id, score)

for document_id, score in document_scores.items()

if (no_include_in_query or document_id in included_document_ids)

and document_id not in excluded_document_ids

),

key=lambda x: -x[1],

)

)Output:

[(1, 'THE PURSUIT OF HAPPYNESS', 5)]Benchmarking the Search Engine Results

We have used the work of Shakespeare to observe the performance of our search engine. Experiments suggest that our simplistic approach is 100 times faster for the search term “just do” compared to the latency of 101ms for traditional search. The search engine is capable of processing the following queries quickly.

Query

Results

Time

just do

[(15, 'othello', 229), (37, 'hamlet', 162), (32, 'troilus_cressida', 149)]

1.389 ms (100x speedup)

just AND do

[(15, 'othello', 229), (37, 'hamlet', 162), (32, 'troilus_cressida', 149)]

1.336 ms

"just do" AND it

[]

3.134 ms

+"just do" AND tomorrow

[]

0.737 ms

-just do

[(6, 'coriolanus', 130), (20, 'midsummer', 107), (26, 'lll', 95)]

0.901 ms

do AND "you can" -tomorrow

[(37, 'hamlet', 161), (32, 'troilus_cressida', 146), (18, 'cleopatra', 127)

12.303 msThe source code

If you want to check out the complete code for the search engine developed in this article, refer to this GitHub repository.

Chapter 3: What can this simple search engine teach us?

We have developed a simplified search engine with basic search capabilities. However, search engines are massive, with hundreds of engineers working behind the scenes to keep the search running. One notable example is Elasticsearch which has over 1800 contributors that have written over 2M lines of code. Search engines of this scale offer many advanced capabilities, including:

Sharding

Explain sharding

Incremental indexing

How you can update the index while it’s being queried.

Performance

There is a massive amount of work going into making search engines perform better.

Conclusion

Undoubtedly, search engines are infinitely complex. Millions of servers are involved to scrape websites from the internet, store and aggregate information, perform huge matrix calculations, and inch the world ever closer to the singularity. Although you easily retrieve your required information from Google, Bing, or Elasticsearch in milliseconds, there is a concerted effort behind the scenes. Search engines perform heavy calculations at the backend to generate accurate results. The search engines have their own mechanisms for information retrieval. Some complex terms in search engines include Query, Documents, Terms, Tokens, Indexing, Scoring, and Relevance.

This article teaches engineers the core concepts of building search engines. For a detailed understanding, check out the following resources.

- Tantivy–Full-text search engine library written in Rust.

- ElasticSearch–Distributed and RESTful search and analytics engine.

- Lucene–the library powering Elasticsearch.

- Quickwit–Cost-efficiency and high reliability distributed search engine.

- PostgresSQL–To understand full-text search.

We have some book recommendations on this topic as well.

- Designing Data-Intensive Applications: The Big Ideas Behind Reliable, Scalable, and Maintainable Systems

- Information Retrieval: Implementing and Evaluating Search Engines (The MIT Press)

- Database Internals: A Deep-Dive Into How Distributed Data Systems Work